Tutorial: Climate Networks¶

The objective of this tutorial is to introduce climate networks, and to explain and illustrate their application with the pyunicorn package. First some theoretical background for understanding general climate networks will be given, and then some methods provided by pyunicorn.climate.ClimateNetwork will be illustrated. An introduction and application of coupled climate networks will follow. For a detailed discussion and further references, please consult Donges et al.

(2015), on which this tutorial is based.

Introduction¶

Climate networks (CN) are a way to apply complex network theory to the climate system, by assuming that each node represents a varying dynamical system. Of interest is then the collective behaviour of these interacting dynamical systems and the structure of the resulting network. This approach was first introduced by Tsonis and Roebber (2004).

CN analysis is a versatile approach for investigating climatological data, and it can be used as a complementary method to classical techniques from multivariate statistics. The approach allows for the analysis of single fields of climatological time series, e.g., surface air temperature observed on a grid, or even two or more fields. It has been successfully applied in many cases, for example to dynamics and predictability of the El Niño Phenomenon (Radebach et al., 2013).

Theory of Climate Networks (CNs)¶

CNs are a typical application of functional networks, which allow to study the dynamical relationships between subsystems of a high-dimensional complex system by constructing networks from it. pyunicorn provides classes for the construction and analysis of such networks, representing the statistical interdependency structure within and between fields of time series using various similarity measures.

Coupling Analysis¶

CNs represent strong statistical interrelationships between time series of climatological fields. These statistical interrelationships can be estimated with methods from the funcnet.CouplingAnalysis class in terms of matrices of statistical similarities \(\textbf{S}\), such as the (lagged) classical linear Pearson product-moment correlation coefficient (CC). The CC of two zero-mean time series variables \(X,Y\), as implemented in funcnet.CouplingAnalysis.cross_correlation(),

is given by

which depends on the covariance \(\langle X_{t-\tau}, Y_t \rangle\) and the standard deviations \(\sigma_X, \sigma_Y\). Lags \(\tau > 0\) correspond to the linear association of past values of \(X\) with \(Y\), and vice versa for \(\tau < 0\).

Similarity Measures for CNs¶

By thresholding the matrix of a statistical similarity measure \(\textbf{S}\), the interrelationships between time series of climate networks can be reconstructed:

where \(\Theta\) is the Heaviside function, \(\beta\) denotes a threshold parameter, and \(A_{pp} = 0\) for all nodes \(p\) to exclude self-loops. A CN that is reconstructed using the Pearson CC from above is called a Pearson correlation CN.

Constructing CNs¶

Having established some basic theoretic background, we will now use pyunicorn to construct a CN. We start by importing the required packages, by downloading an example NOAA dataset, and by specifying some metadata for it.

[1]:

import numpy as np

from matplotlib import pyplot as plt

from pyunicorn import climate

[2]:

DATA_NAME = "air.mon.mean.nc"

DATA_URL = f"https://psl.noaa.gov/repository/entry/get/{DATA_NAME}?entryid=synth%3Ae570c8f9-ec09-4e89-93b4-babd5651e7a9%3AL25jZXAucmVhbmFseXNpcy5kZXJpdmVkL3N1cmZhY2UvYWlyLm1vbi5tZWFuLm5j"

DATA_FILE = f"./data/{DATA_NAME}"

![ -f {DATA_FILE} ] || wget -O {DATA_FILE} -nv --show-progress "{DATA_URL}"

./data/air.mon.mean 100%[===================>] 27.46M 10.1MB/s in 2.7s

2024-02-05 03:01:16 URL:https://psl.noaa.gov/repository/entry/get/air.mon.mean.nc?entryid=synth%3Ae570c8f9-ec09-4e89-93b4-babd5651e7a9%3AL25jZXAucmVhbmFseXNpcy5kZXJpdmVkL3N1cmZhY2UvYWlyLm1vbi5tZWFuLm5j [28791289/28791289] -> "./data/air.mon.mean.nc" [1]

[3]:

DATA_FILENAME = "./data/air.mon.mean.nc"

# Indicate data source (optional)

DATA_SOURCE = "ncep_ncar_reanalysis"

# Type of data file ("NetCDF" indicates a NetCDF file with data on a regular

# lat-lon grid, "iNetCDF" allows for arbitrary grids - > see documentation).

FILE_TYPE = "NetCDF"

# Name of observable in NetCDF file ("air" indicates surface air temperature

# in NCEP/NCAR reanalysis data)

OBSERVABLE_NAME = "air"

# Select a region in time and space from the data (here the whole dataset)

WINDOW = {"time_min": 0., "time_max": 0., "lat_min": 0, "lon_min": 0,

"lat_max": 30, "lon_max": 0}

# Indicate the length of the annual cycle in the data (e.g., 12 for monthly

# data). This is used for calculating climatological anomaly values.

TIME_CYCLE = 12

Now we set some parameters for the CN construction, the first being the threshold \(\beta\) from above, and create a ClimateData object containing our data.

[4]:

# For setting fixed threshold

THRESHOLD = 0.5

# For setting fixed link density

LINK_DENSITY = 0.005

# Indicates whether to use only data from winter months (DJF) for calculating

# correlations

WINTER_ONLY = False

data = climate.ClimateData.Load(

file_name=DATA_FILENAME, observable_name=OBSERVABLE_NAME,

data_source=DATA_SOURCE, file_type=FILE_TYPE,

window=WINDOW, time_cycle=TIME_CYCLE)

print(data)

Reading NetCDF File and converting data to NumPy array...

Global attributes:

description: Data from NCEP initialized reanalysis (4x/day). These are the 0.9950 sigma level values

platform: Model

Conventions: COARDS

NCO: 20121012

history: Thu May 4 20:11:16 2000: ncrcat -d time,0,623 /Datasets/ncep.reanalysis.derived/surface/air.mon.mean.nc air.mon.mean.nc

Thu May 4 18:11:50 2000: ncrcat -d time,0,622 /Datasets/ncep.reanalysis.derived/surface/air.mon.mean.nc ./surface/air.mon.mean.nc

Mon Jul 5 23:47:18 1999: ncrcat ./air.mon.mean.nc /Datasets/ncep.reanalysis.derived/surface/air.mon.mean.nc /dm/dmwork/nmc.rean.ingest/combinedMMs/surface/air.mon.mean.nc

/home/hoop/crdc/cpreanjuke2farm/cpreanjuke2farm Mon Oct 23 21:04:20 1995 from air.sfc.gauss.85.nc

created 95/03/13 by Hoop (netCDF2.3)

Converted to chunked, deflated non-packed NetCDF4 2014/09

title: monthly mean air.sig995 from the NCEP Reanalysis

dataset_title: NCEP-NCAR Reanalysis 1

References: http://www.psl.noaa.gov/data/gridded/data.ncep.reanalysis.derived.html

Variables (size):

lat (73)

lon (144)

time (913)

air (913)

ClimateData:

Data: 10512 grid points, 9597456 measurements.

Geographical boundaries:

time lat lon

min 1297320.0 -90.00 0.00

max 1963536.0 90.00 357.50

Next, we construct a CN based on the Pearson CC, without lag and with fixed threshold. Alternatively, several other similarity measures and construction mechanisms may be used as well.

[5]:

net = climate.TsonisClimateNetwork(

data, threshold=THRESHOLD, winter_only=WINTER_ONLY)

Generating a Tsonis climate network...

Calculating daily (monthly) anomaly values...

Calculating correlation matrix at zero lag from anomaly values...

Extracting network adjacency matrix by thresholding...

Setting area weights according to type surface ...

Setting area weights according to type surface ...

[6]:

# Create a climate network based on Pearson correlation without lag and with

# fixed link density

# net = climate.TsonisClimateNetwork(

# data, link_density=LINK_DENSITY, winter_only=WINTER_ONLY)

[7]:

# Create a climate network based on Spearman's rank order correlation without

# lag and with fixed threshold

# net = climate.SpearmanClimateNetwork(

# data, threshold=THRESHOLD, winter_only=WINTER_ONLY)

[8]:

# Create a climate network based on mutual information without lag and with

# fixed threshold

# net = climate.MutualInfoClimateNetwork(

# data, threshold=THRESHOLD, winter_only=WINTER_ONLY)

We finish by calculating some basic network measures for the resulting CN, optionally saving them to text files.

[9]:

print("Link density:", net.link_density)

degree = net.degree()

closeness = net.closeness()

betweenness = net.betweenness()

clustering = net.local_clustering()

ald = net.average_link_distance()

mld = net.max_link_distance()

# Save the grid (mainly vertex coordinates) to text files

#data.grid.save_txt(filename="grid.txt")

# Save the degree sequence. Other measures may be saved similarly.

#np.savetxt("degree.txt", degree)

Link density: 0.026892009342390742

Calculating closeness...

Calculating node betweenness...

Calculating local clustering coefficients...

Calculating average link distance...

Calculating angular great circle distance...

Calculating maximum link distance...

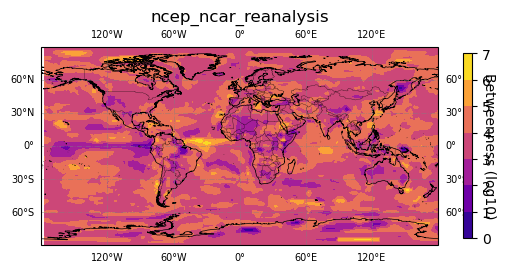

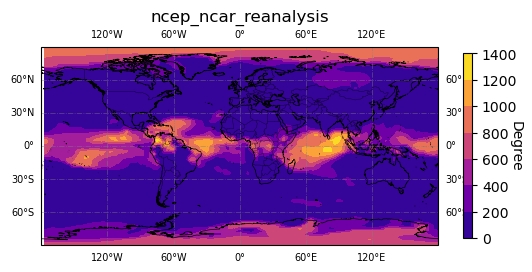

Plotting CNs¶

pyunicorn provides a basic plotting feature based on the cartopy and matplotlib packages, which can be used to have a first look at the generated data. We start by initializing a MapPlot object:

[10]:

# create a Cartopy plot instance called map_plot

# from the data with title DATA_SOURCE

map_plot = climate.MapPlot(data.grid, DATA_SOURCE)

With MapPlot.plot(), we can now plot some of our previously calculated measures on the given grid.

[11]:

# plot degree

map_plot.plot(degree, "Degree")

# add matplotlib.pyplot or cartopy commands to customize figure

plt.set_cmap('plasma')

# optionally save figure

#plt.savefig('degree.png')

Try plotting more measures if you like.

[12]:

# plot betwenness

map_plot.plot(np.log10(betweenness + 1), "Betweenness (log10)")

# add matplotlib.pyplot or cartopy commands to customize figure

plt.set_cmap('plasma')

# optionally save figure

#plt.savefig('degree.png')