The tune of a drifting pattern

Well, so we made this.

But what is this we made?

Pattern: repeat

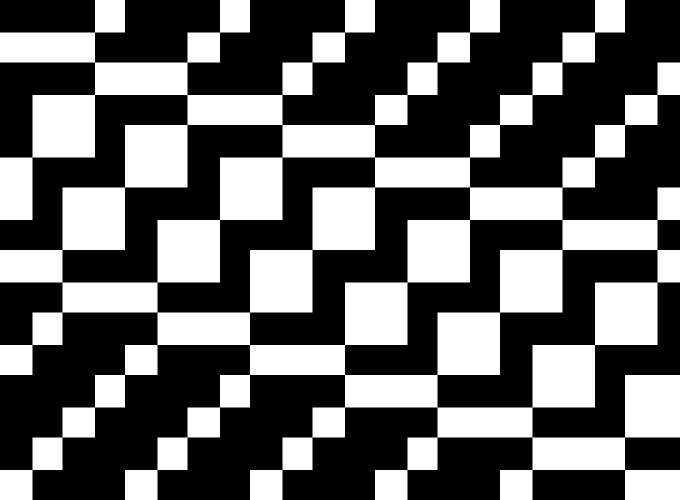

My wife is a textile and surface designer and somewhat obsessed with weave designs. More specifically, with very basic weave patterns that can be encoded as binary matrices like this:

Without going into too much detail, weaving has two basic components that are the same from the frames you probably know from childhood all the way to industrial looms: the warp and the weft. The longitudinal warp yarns are held stationary under tension on a frame or loom, while the weft is drawn through and inserted over and under the warp.

So in the picture above, each of the six patterns encodes a certain weave design. Each column represents an individual warp thread. As the weft goes through row by row, it goes over the warp thread at a black cell and under it at a white cell. The patterns wrap around vertically and horizontally, so in this case the width must be a multiple of 16 (the number of columns) and the length needed to complete the pattern is a multiple of 48 (the number of rows).
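To make the wrap-around concrete, here is a minimal sketch of tiling a binary repeat into a larger piece of fabric. The 4x4 repeat and the helper name `tile_pattern` are made up for illustration; they are not one of the six patterns shown.

```python
import numpy as np

# Hypothetical 4x4 repeat: 1 = weft goes over the warp (black cell),
# 0 = weft goes under the warp (white cell).
repeat = np.array([
    [1, 1, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 1],
    [1, 0, 0, 1],
])

def tile_pattern(repeat, n_rows, n_cols):
    """Tile a repeat so that it wraps around vertically and horizontally."""
    rep_rows, rep_cols = repeat.shape
    if n_rows % rep_rows or n_cols % rep_cols:
        raise ValueError("fabric size must be a multiple of the repeat size")
    return np.tile(repeat, (n_rows // rep_rows, n_cols // rep_cols))

fabric = tile_pattern(repeat, 8, 12)
print(fabric.shape)  # (8, 12)
```

For the patterns in this post, the same check would require a width that is a multiple of 16 and a length that is a multiple of 48.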

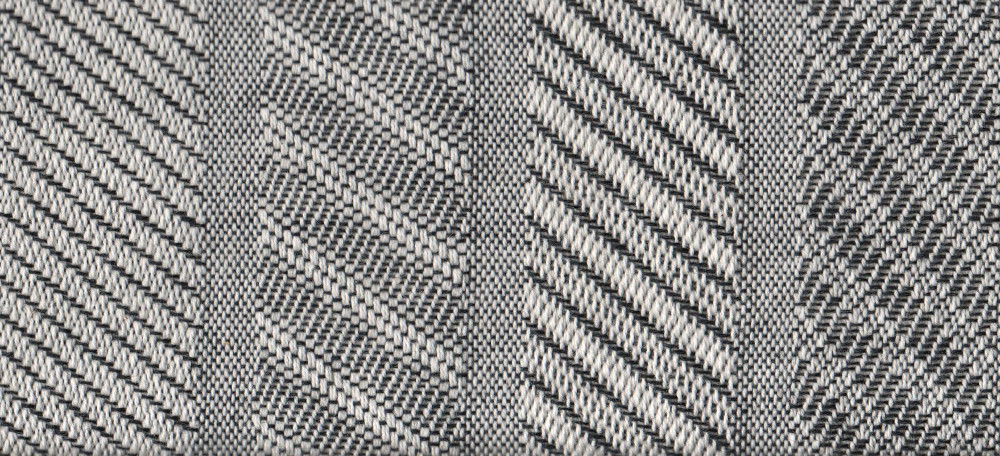

Real world things

This is how the woven fabrics look (they are from different designs and rotated 90°).

Scanned weave samples

The music of curtains

In the old days these were then turned into punch cards that were fed to a loom to produce the desired weave. However, if you can feed it to a loom you can also feed it to a barrel organ. Obviously.

For this you can use, for example, actual mini barrel organs (which we did), or you can create digital ones to transform the patterns into a catchy little tune. We did this using the pyo module for digital signal processing. This is probably overkill, but it was fun to look conceptually at the low-level pattern-to-sound transformation. The initial idea was to simply assign a note (frequency) to each of the 16 shafts (columns) and then step through the pattern row by row and play a sound for every black cell.

from PIL import Image

import numpy as np

from pyo import *

dir_project = "/path/to/data/"

im_pattern = Image.open(dir_project + 'p4.png')

patrone = np.array(im_pattern)[:,:,3]

patrone = patrone.transpose()/255

weft_size = patrone.shape[0]

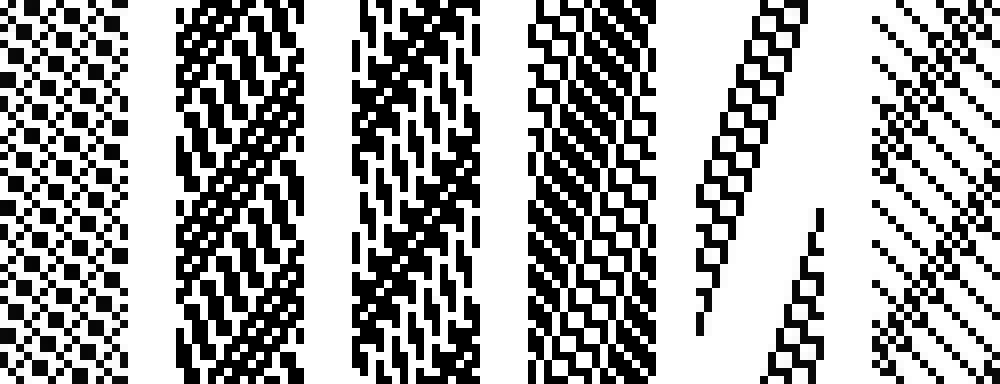

warp_size = patrone.shape[1]We first load the png pattern image from file using PIL (pyhton imaging library). We then discard everything but the alpha channel and normalize it to values in [0,1]. As values where either 0 or 255, values are now either 0 or 1. In this example, pattern p4 transposed looks like this:

Pattern 4
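The alpha-channel step can be tried without a pattern file. In this small sketch, a hand-made RGBA array stands in for what `np.array(Image.open(...))` returns for an RGBA PNG; the 2x3 size and the pixel positions are assumptions chosen for illustration.

```python
import numpy as np

# Stand-in for np.array(im_pattern): height 2, width 3, 4 channels (RGBA).
rgba = np.zeros((2, 3, 4), dtype=np.uint8)
rgba[0, 0, 3] = 255  # opaque pixel = black cell
rgba[1, 2, 3] = 255

alpha = rgba[:, :, 3]              # keep only the alpha channel
pattern = alpha.transpose() / 255  # swap the axes, scale 0/255 to 0.0/1.0

print(pattern.shape)  # (3, 2)
print(pattern)
```

After the transpose, the first axis of the array is what the code above calls `weft_size` and the second is `warp_size`.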

musical_scale = np.genfromtxt(dir_project + 'ganztoene.csv', delimiter=',')

start_note = 15

notes = list(musical_scale[:,1])[start_note:start_note+warp_size]

trig_notes = np.transpose(patrone*notes).tolist()

We next load a CSV file containing a musical scale and select the row we want to use as the starting note. In our case, this file simply contains a list of note names and their frequencies:

| Name | Frequency (Hz) |
|---|---|
| H | 123.47 |
| c | 130.81 |
| d | 146.83 |
| e | 164.81 |
| f | 174.61 |
| g | 196.00 |

The last line of the code above (the trig_notes assignment) converts the binary matrix into a list of triggers at the respective frequencies of the 16 shafts.
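The conversion relies on NumPy broadcasting. Here is a toy version with a made-up 3-step, 4-shaft pattern and four of the frequencies from the table above:

```python
import numpy as np

# Hypothetical pattern: rows = weft steps, columns = shafts.
pattern = np.array([
    [1, 0, 0, 1],
    [0, 1, 0, 0],
    [1, 1, 0, 0],
])
# One frequency in Hz per shaft (c, d, e, f from the table).
notes = [130.81, 146.83, 164.81, 174.61]

# Broadcasting multiplies every row element-wise with the note list,
# so a black cell becomes the shaft's frequency and a white cell stays 0.
freq_per_step = pattern * notes

# Transposing gives one list per shaft with that shaft's value at every step.
trig_notes = np.transpose(freq_per_step).tolist()
print(trig_notes[0])  # [130.81, 0.0, 130.81]
```

A frequency of 0 then simply means "this shaft is silent at this step".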

soundDevice = 7

s = Server()

s.setInOutDevice(soundDevice)

s.setDuplex(0)

s.boot()

s.setAmp(0.2)

These lines only initialize and boot the pyo sound server. You might have to set a different sound device depending on your hardware (you can list the available devices with pa_list_devices()).

### DURATIONS

#duration = [1]

#duration = [1,0.5]

duration = [2,1,0.5,0.25]

#duration = [1,0.75,0.5,0.25]

#duration = [2,1.75,1.5,1.25,1,0.75,0.5,0.25]

#duration = [0.25,0.5,1,2]

#duration = [2,1,0.5,0.25, 0.25, 0.5, 1, 2]

It turned out that simply playing the pattern as planned was not very satisfying. Especially in the patterns with lots of black cells in one row, too many notes were played at the same time. We decided on two different ways to untangle the patterns in time. One idea was to merge all consecutive black cells in a shaft into one trigger, using the length of the run as the tone duration. The other one, which I show here, was to allow a different duration (i.e. speed) for each shaft. The duration vector wraps around, so duration = [1,0.5] means that the shafts alternate between full-length sounds and half-length sounds (in seconds).
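The first idea, turning runs of consecutive black cells into single triggers, is not shown in this post. A minimal sketch of how it could look, assuming one step lasts `step` seconds and ignoring runs that wrap around the end of the pattern (the function name is hypothetical):

```python
def runs_to_triggers(column, step=1.0):
    """Collapse consecutive 1s in one shaft into (start_time, duration) pairs."""
    triggers = []
    start = None
    for i, cell in enumerate(column):
        if cell and start is None:
            start = i  # a new run of black cells begins
        elif not cell and start is not None:
            triggers.append((start * step, (i - start) * step))
            start = None
    if start is not None:  # the run reaches the end of the column
        triggers.append((start * step, (len(column) - start) * step))
    return triggers

print(runs_to_triggers([1, 1, 0, 1, 0, 0, 1, 1, 1]))
# [(0.0, 2.0), (3.0, 1.0), (6.0, 3.0)]
```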

With the setting above, pattern 4 eventually sounds like this.

m = Metro(duration).play()

c = Counter(m, min=0, max=weft_size)

t_n = DataTable(size=weft_size, init=trig_notes, chnls=warp_size)

f1 = TableIndex(t_n, c)

We create a metronome and a counter for each duration, plus an indexed data table the size of the pattern that contains all the frequencies played at each time step.
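To see how the shafts drift apart, it can help to model the counters in plain Python. This is a simplified model, not pyo's implementation: it assumes every shaft ticks at a fixed period taken from the wrapped duration list and that its counter wraps after `n_rows` ticks. The function name is made up.

```python
def rows_at_time(t, durations, n_shafts, n_rows):
    """Row index each shaft has reached after t seconds."""
    rows = []
    for shaft in range(n_shafts):
        # The duration list wraps around over the shafts.
        period = durations[shaft % len(durations)]
        ticks = int(t // period)      # completed metronome ticks
        rows.append(ticks % n_rows)   # the counter wraps at the pattern length
    return rows

print(rows_at_time(4.0, [2, 1, 0.5, 0.25], n_shafts=8, n_rows=48))
# [2, 4, 8, 16, 2, 4, 8, 16]
```

After four seconds the fastest shafts are already 16 rows into the pattern while the slowest are at row 2, which is the drift you hear.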

#env = HannTable()

#env = CosTable([(0,0), (100,0.5), (500, 0.3), (4096,0.3), (8192,0)])

#env = ChebyTable([1,0,.33,0,.2,0,.143,0,.111])

#env = HarmTable([1,0,.33,0,.2,0,.143,0,.111])

#env = CosTable([(0,0), (100,1), (1000,.25), (8191,0)])

env = CosTable([(0,0), (50,1), (250,.3), (8191,0)])

amp = TrigEnv(m, table=env, dur=(np.asarray(duration)*0.9).tolist(), mul=0.7)

#si = FastSine(freq=f1, mul=amp*0.9)

si = Sine(freq=f1, mul=amp*0.9)

#si = SuperSaw(freq=f1, detune=0.5, bal=0.7, mul=amp*0.9, add=0)

#si = LFO(freq=f1, sharp=0.5, type=7, mul=amp*0.9)

#si = SumOsc(freq=f1, mul=amp*0.9)

#h = Chorus(si, depth=[1.5,1.6], feedback=0.5, bal=0.5)

#h = Harmonizer(si, transpo=-5, winsize=0.05)

#h = STRev(si)

h = Freeverb(si)

#h = si

snd = h.out()

The commented-out lines are all variations to play around with. First come envelopes of different shapes. A trigger envelope (TrigEnv) reads the envelope table each time it receives a trigger from the metronome. Then come a sound source (here a simple sine wave) and an effect (here a reverb).
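What the envelope does to a single note can be sketched offline with NumPy. This is a simplification under stated assumptions: the breakpoints are joined with straight lines (pyo's CosTable uses cosine-shaped segments), the sample rate of 44100 Hz is assumed, and both function names are made up.

```python
import numpy as np

def envelope(points, size=8192):
    """Sample a breakpoint envelope into a table (linear interpolation)."""
    xs, ys = zip(*points)
    return np.interp(np.arange(size), xs, ys)

def enveloped_sine(freq, dur, env_table, sr=44100):
    """Sine tone of `dur` seconds, shaped by reading the table once."""
    n = int(dur * sr)
    t = np.arange(n) / sr
    # Stretch the table over the whole note by resampling it to n samples.
    pos = np.linspace(0, len(env_table) - 1, n)
    amp = np.interp(pos, np.arange(len(env_table)), env_table)
    return amp * np.sin(2 * np.pi * freq * t)

# Same breakpoints as the active CosTable line above.
env = envelope([(0, 0), (50, 1), (250, 0.3), (8191, 0)])
tone = enveloped_sine(130.81, 0.9, env)
print(len(tone))
```

The sharp peak at table position 50 gives each note a short attack, and the long tail down to 0 lets it fade out before the next trigger.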

h.ctrl(title="Effect")

si.ctrl(title="Sound Source")

amp.ctrl(title = "Amplifier")

m.ctrl(title="Metronome")

sc = Scope(h)

sp = Spectrum(si)

s.gui(locals())

This last bit only starts the GUI and some control elements. That’s it!

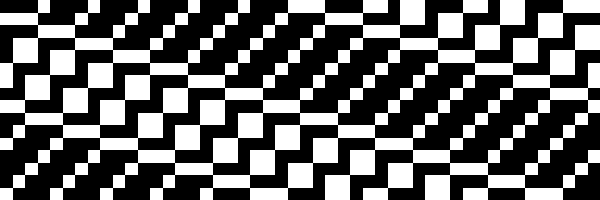

Drifting patterns video

Finally, we visualized the patterns created by the asynchronous movement of the different shafts to get the video at the top of the page.

from PIL import Image

import numpy as np

import math

# path to pattern files

dir_data = '/path/to/patterns/'

# pattern file name

fn_pattern = "p4"

# prefix for image output

fn_img_prefix = "pattern_" + fn_pattern

# frames per second to render

fps = 10

# total duration of animation

total_duration = 48

# set duration of different columns (frames of the loom) in pattern (wraparound), set [1] for equal duration

#duration = [1,0.5,0.25]

duration = [0.25,0.5,1,2]

# load pattern image

im_pattern = Image.open(dir_data + fn_pattern + '.png')

patrone = np.array(im_pattern)[:,:,3]

patrone = patrone.transpose()/255

# Calculate column speed

speed = 1/np.asarray(duration)

rows = patrone.shape[0]

cols = patrone.shape[1]

m_size = rows*10

p_rows = int(m_size)

p_cols = int(m_size/(rows/cols))

r_offset = int(p_rows*0.25)

for t in range(total_duration*fps):

    im = np.ones([p_rows,p_cols])

    for col in range(cols):

        for row in range(rows):

            if (patrone[row,col] == 1):

                brush = 0.0

                distance = math.fabs((row*int(p_rows/rows)-(p_rows/rows)*t*speed[col%len(speed)]*(1/fps))%p_rows)

                if (distance > (rows-5)*int(p_rows/rows)):

                    d = 0.6-(rows*int(p_rows/rows) - distance)/int(p_rows/rows)*0.1

                    brush = d

                for pcc in range(int(p_cols/cols)):

                    pxc = col*int(p_cols/cols)+pcc

                    for prc in range(int(p_rows/rows)):

                        pxr = (r_offset+row*int(p_rows/rows)+prc-(p_rows/rows)* int(t*speed[col%len(speed)])*(1/fps))%p_rows

                        im[int(pxr),int(pxc)] = brush

    for i in range(p_cols):

        im[r_offset-1,i] = 0.5

        im[r_offset,i] = 0.5

        im[r_offset+1,i] = 0.5

    ima = Image.fromarray(np.uint8(im*255))

    ima.save(dir_data + 'movie/' + fn_img_prefix + '_' + str(t+1) + '.png')
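The core of the frame loop, each column scrolling upward at its own speed, can be shown at cell level with np.roll. This is a simplified sketch rather than the rendering code above: it moves whole cells only, takes `t` in seconds, and skips the pixel scaling, the shading and the marker line. The function name is hypothetical.

```python
import numpy as np

def drift_frame(pattern, t, durations):
    """Shift each column of `pattern` upward by the steps it has made at time t."""
    frame = np.empty_like(pattern)
    for col in range(pattern.shape[1]):
        speed = 1 / durations[col % len(durations)]  # steps per second
        shift = int(t * speed)                       # whole cells moved so far
        frame[:, col] = np.roll(pattern[:, col], -shift)
    return frame

pattern = np.eye(4, dtype=int)
print(drift_frame(pattern, t=1.0, durations=[0.25, 0.5, 1, 2]))
# [[1 0 0 0]
#  [0 0 1 0]
#  [0 0 0 0]
#  [0 1 0 1]]
```

After one second the first column has wrapped around completely, the second has moved two cells, the third one cell, and the fourth has not moved yet.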

import io

import os

import subprocess

def convert_video(pfn_img_prefix, pfps):

    # name of the output movie, built from the prefix passed in

    fn_movie = pfn_img_prefix + "_" + str(pfps) + ".mp4"

    os.chdir(dir_data + "movie/")

    # remove an old movie with the same name

    command = "rm " + fn_movie

    subprocess.call(command,shell=True)

    command = "ffmpeg -r " + str(pfps) + " -f image2 -i " + pfn_img_prefix + "_%d.png -vcodec libx264 -crf 1 -pix_fmt yuv420p {output}".format(output=fn_movie)

    #print(command)

    subprocess.call(command,shell=True)

    # delete the rendered frames

    command = "rm *.png"

    subprocess.call(command,shell=True)

convert_video(fn_img_prefix, fps)
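If you prefer not to build shell strings, the same ffmpeg call can be passed as an argument list. This is a sketch under assumptions: ffmpeg is on the PATH, the frames are named as above, and the helper names `build_ffmpeg_command` and `convert_video_safe` are made up. The `-y` flag, which overwrites an existing output file, replaces the initial `rm`.

```python
import subprocess
from pathlib import Path

def build_ffmpeg_command(img_prefix, fps, out_name):
    """Return the ffmpeg argument list for frames named <prefix>_<n>.png."""
    return [
        "ffmpeg", "-y",  # -y overwrites an existing output file
        "-r", str(fps),
        "-f", "image2",
        "-i", f"{img_prefix}_%d.png",
        "-vcodec", "libx264",
        "-crf", "1",
        "-pix_fmt", "yuv420p",
        out_name,
    ]

def convert_video_safe(movie_dir, img_prefix, fps):
    """Encode the frames in movie_dir, then delete only those frames."""
    movie_dir = Path(movie_dir)
    out_name = f"{img_prefix}_{fps}.mp4"
    # check=True raises if ffmpeg fails, so frames are kept on error.
    subprocess.run(build_ffmpeg_command(img_prefix, fps, out_name),
                   cwd=movie_dir, check=True)
    for frame in movie_dir.glob(f"{img_prefix}_*.png"):
        frame.unlink()

print(build_ffmpeg_command("pattern_p4", 10, "pattern_p4_10.mp4"))
```

Passing a list avoids quoting problems with unusual file names, and deleting by prefix leaves any other PNG files in the folder alone.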